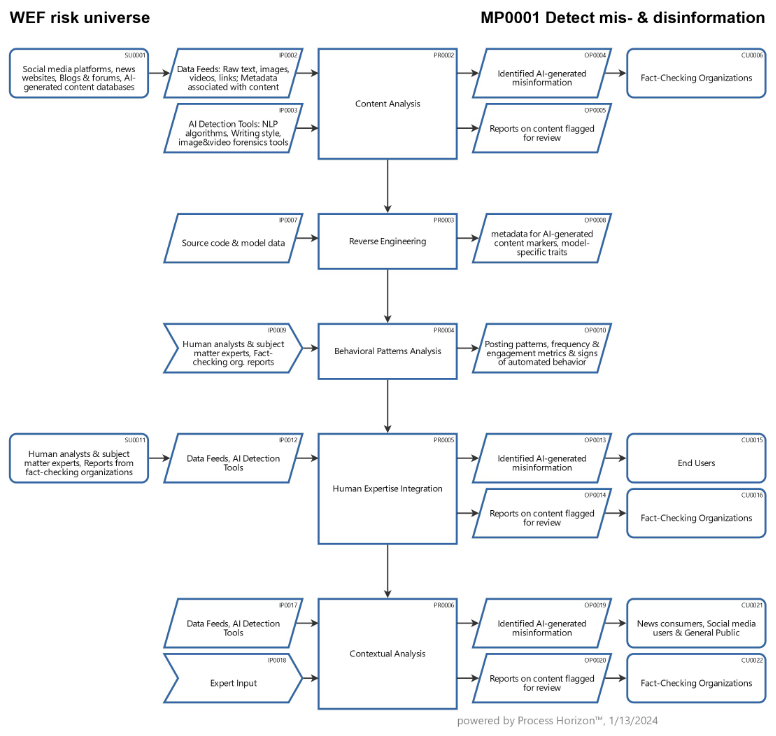

An interactive process to detect AI-generated misinformation

Misinformation and disinformation were identified as the most severe global risk over the next two years by the World Economic Forum (WEF) in its Global Risks Report 2024.

Detecting misinformation and disinformation generated by AI poses additional challenges as AI-generated content can mimic human-generated content more convincingly. The process involves a combination of technological solutions, human oversight and ongoing research.

1. Content Analysis

- Analyze writing style: AI-generated content may lack the subtle nuances of human expression. Tools that analyze writing style and syntax can help identify anomalies.

- Assess coherence: Evaluate the logical flow and coherence of the content. AI may produce content that lacks logical consistency.
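The writing-style checks above can be illustrated with a few simple stylometric features. This is a minimal sketch, not an established detector: the function name `style_features` and the choice of features are assumptions made for illustration, and none of these numbers is a reliable indicator of AI authorship on its own.

```python
import re
from statistics import mean, pstdev


def style_features(text: str) -> dict:
    """Compute a few rough stylometric features for a piece of text."""
    # Rough sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[A-Za-z']+", text.lower())
    # Number of words in each sentence.
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        "sentence_count": len(sentences),
        "mean_sentence_length": mean(lengths) if lengths else 0.0,
        # Very uniform sentence lengths are, at best, one weak signal.
        "sentence_length_stdev": pstdev(lengths) if lengths else 0.0,
        # Share of distinct words; low values indicate repetitive vocabulary.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }
```

Features like these would normally be compared against a baseline of known human-written text from the same source before drawing any conclusion.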

2. Reverse Engineering

- Investigate the source code: Analyze the source code or metadata associated with the content to identify patterns or markers indicative of AI-generated content.

- Identify model-specific traits: Different AI models may exhibit specific characteristics in their output. Researchers may develop methods to identify these traits.
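As a hedged illustration of the metadata check above, the sketch below scans metadata that has already been extracted into a dictionary for strings that name a generator. The marker list `GENERATOR_HINTS` and the function `find_generator_markers` are hypothetical and chosen only for illustration; real metadata fields vary by file format and are easily stripped or forged, so the absence of a marker proves nothing.

```python
# Hypothetical marker substrings; a real deployment would maintain its own list.
GENERATOR_HINTS = ("stable diffusion", "dall-e", "midjourney")


def find_generator_markers(metadata: dict) -> list:
    """Return (field, hint) pairs where a metadata value names a known generator."""
    hits = []
    for field, value in metadata.items():
        lowered = str(value).lower()
        for hint in GENERATOR_HINTS:
            if hint in lowered:
                hits.append((field, hint))
    return hits


# Example metadata, invented for illustration.
sample = {"Software": "Stable Diffusion 1.5", "Author": "Jane"}
print(find_generator_markers(sample))
```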

3. Behavioral Patterns

- Monitor behavioral patterns: Track the behavior of accounts or sources suspected of posting AI-generated content. Unusual posting patterns, high posting frequency, or round-the-clock activity may indicate automation.

- Analyze engagement: Look for signs of automated engagement, such as likes, shares or comments that lack genuine human interaction.
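The posting-pattern signals above can be sketched from a list of post timestamps. This is an illustrative heuristic under stated assumptions: the function names and the thresholds (`min_active_hours=20`, `max_interval_cv=0.1`) are invented for this example and would need tuning against real account data.

```python
from datetime import datetime
from statistics import mean, pstdev


def posting_profile(timestamps: list) -> dict:
    """Summarize how evenly and how widely across the day an account posts."""
    ts = sorted(timestamps)
    profile = {
        "posts": len(ts),
        # Number of distinct hours of the day with at least one post.
        "active_hours": len({t.hour for t in ts}),
        "interval_cv": None,
    }
    if len(ts) >= 2:
        gaps = [(b - a).total_seconds() for a, b in zip(ts, ts[1:])]
        avg = mean(gaps)
        # Coefficient of variation: near 0 means machine-like regular gaps.
        profile["interval_cv"] = pstdev(gaps) / avg if avg > 0 else 0.0
    return profile


def looks_automated(profile: dict, min_active_hours: int = 20,
                    max_interval_cv: float = 0.1) -> bool:
    """Flag near round-the-clock activity or very regular posting intervals."""
    cv = profile["interval_cv"]
    return (profile["active_hours"] >= min_active_hours
            or (cv is not None and cv <= max_interval_cv))
```

A flag from a heuristic like this is a reason to look closer, not proof of automation: scheduled posts and shared team accounts can produce similar patterns.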

4. AI-Generated Content Detection Tools

- Develop and utilize specialized tools: Researchers and organizations are working on tools specifically designed to detect AI-generated content. These tools may use machine learning models trained on known AI-generated samples.

- Collaborate with the AI community: Engage with researchers and experts in the field of artificial intelligence to stay updated on the latest developments in AI detection.
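To make the idea of a model trained on known AI-generated samples concrete, here is a minimal sketch of a multinomial Naive Bayes text classifier written with the standard library only. Naive Bayes is chosen here purely for brevity and is not implied to be what existing detection tools use; the class name `NaiveBayesDetector` and the four toy training sentences are invented for illustration and are far too few for any real use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesDetector:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for c in self.classes:
            total_words = sum(self.word_counts[c].values())
            # Log prior plus smoothed log likelihood of each known word.
            score = math.log(self.doc_counts[c] / total_docs)
            for w in tokenize(text):
                if w in self.vocab:
                    score += math.log((self.word_counts[c][w] + 1)
                                      / (total_words + len(self.vocab)))
            scores[c] = score
        return max(scores, key=scores.get)


# Toy, invented training samples (assumption: labels are known in advance).
TOY_TEXTS = [
    "as an ai language model i cannot",
    "in conclusion it is important to note",
    "lol that game last night was wild",
    "ugh my train is late again",
]
TOY_LABELS = ["ai", "ai", "human", "human"]

detector = NaiveBayesDetector().fit(TOY_TEXTS, TOY_LABELS)
print(detector.predict("it is important to note that"))
```

In practice such a model would need a large, regularly refreshed training set, since the output of newer AI models drifts away from older samples.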

5. Image and Video Analysis

- Apply forensic analysis: Employ forensic techniques to analyze images and videos for signs of manipulation or AI-generated content.

- Leverage deepfake detection tools: Specialized tools and algorithms can help identify deepfakes and other AI-generated visual content.

6. Human Expertise

- Involve human experts: Combine automated tools with human expertise to assess the nuances of content. Humans can often detect subtle cues that automated systems may miss.

- Establish expert panels: Form expert panels or collaborate with professionals from diverse fields to evaluate the credibility of information.
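One way to combine automated tools with human review is to let a detector score decide how urgently a person looks at an item, rather than letting the tool make the final call. The sketch below assumes a score between 0 and 1 from some upstream detector; the function name `route_item`, the queue names, and the thresholds are hypothetical.

```python
def route_item(detector_score: float, low: float = 0.2, high: float = 0.8) -> str:
    """Map an automated detector score in [0, 1] to a review queue."""
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0 and 1")
    if detector_score >= high:
        # Strong signal: still reviewed by a person, but first.
        return "priority_review"
    if detector_score <= low:
        return "auto_pass"
    # Ambiguous scores are where human judgment adds the most.
    return "human_review"


for score in (0.05, 0.5, 0.95):
    print(score, route_item(score))
```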

7. Contextual Analysis

- Consider the context: Evaluate the context in which AI-generated content appears. Misinformation often thrives when shared out of context.

- Verify information with reliable sources: Cross-reference AI-generated content with information from trusted and established sources.

8. Ongoing Research and Adaptation

- Stay updated on AI advancements: The field of AI is constantly evolving. Stay informed about new AI models and techniques to adapt detection methods accordingly.

- Improve continuously: Refine detection methods as new research is published and new AI technologies emerge.

Combating AI-generated misinformation and disinformation requires a multi-faceted approach that combines technological solutions, human judgment, and collaborative efforts within the research and cybersecurity communities. The landscape is dynamic and vigilance is essential to stay ahead of emerging threats.

To support responsible AI product development and trustworthy deployment, define the process context and generate an end-to-end process map with the ProcessHorizon web app: https://processhorizon.com