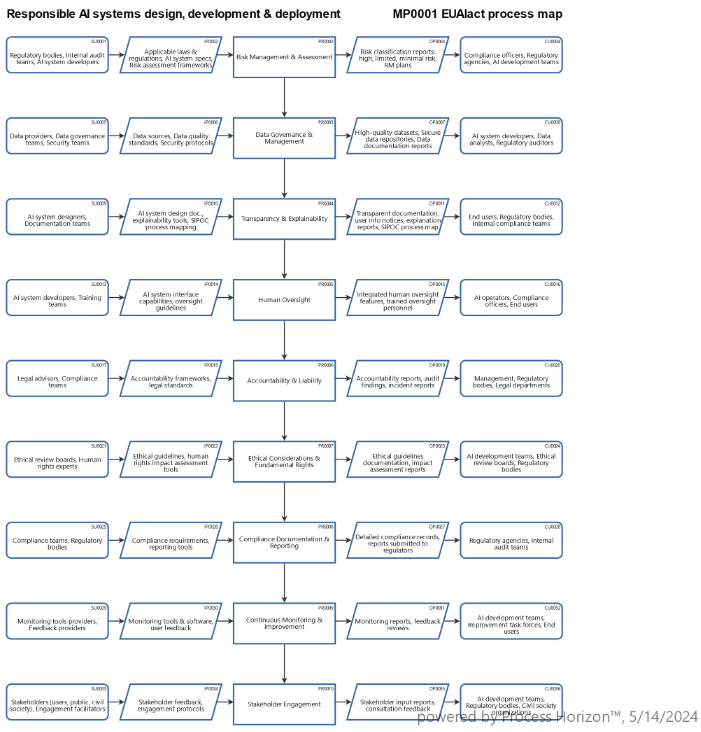

Responsible AI systems design, development & deployment

To comply with the EU Artificial Intelligence Act (EU AI Act), organizations need to implement a set of processes, practices and governance structures. These are designed to meet the requirements laid out in the Act and to ensure that AI systems are developed, deployed and operated in a way that is safe, transparent and respectful of fundamental rights.

1. Risk Management & Assessment

- Classify AI Systems: Categorize each AI system by the risk it poses, e.g. as high-risk, limited-risk or minimal-risk.

- Conduct Risk Assessments: Identify potential risks associated with AI systems, considering their impact on fundamental rights, safety, security, and societal well-being.
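
As a minimal sketch, the classification and assessment steps above could be tracked in a simple inventory. The names `RiskTier`, `AISystemRecord` and `needs_risk_assessment`, the example systems and the tiers assigned to them are all illustrative assumptions, not definitions taken from the EU AI Act; the actual tier of a system has to be determined against the Act itself.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    # Tiers as named in this article; labels are illustrative.
    HIGH = "high-risk"
    LIMITED = "limited-risk"
    MINIMAL = "minimal-risk"


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    tier: RiskTier
    identified_risks: list[str] = field(default_factory=list)


def needs_risk_assessment(record: AISystemRecord) -> bool:
    """Hypothetical rule: a high-risk system with no documented risks is overdue."""
    return record.tier is RiskTier.HIGH and not record.identified_risks


# Example inventory; the tier assignments are assumptions for illustration only.
inventory = [
    AISystemRecord("cv-screener", "Rank job applications", RiskTier.HIGH),
    AISystemRecord("support-bot", "Answer customer questions", RiskTier.LIMITED),
    AISystemRecord("spam-filter", "Filter inbound email", RiskTier.MINIMAL),
]

pending = [r.name for r in inventory if needs_risk_assessment(r)]
print(pending)
```

Keeping the tier and the identified risks in one record makes it easy to see which systems still lack a documented assessment.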

2. Data Governance & Management

- Data Quality Control: Ensure datasets are of high quality and, as far as possible, free from biases and errors.

- Data Security: Implement robust data protection measures to prevent unauthorized access or data breaches.

- Data Documentation: Maintain clear documentation of the data used, including its source, characteristics and how it is processed.
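
The data documentation point can be sketched as a small record that captures source, characteristics and processing, plus a check for fields left empty. `DatasetDoc` and `missing_fields` are hypothetical names, and the example dataset is made up for illustration.

```python
from dataclasses import asdict, dataclass


@dataclass
class DatasetDoc:
    name: str
    source: str           # where the data comes from
    characteristics: str  # e.g. size, time range, known gaps
    processing: str       # how the data is cleaned or transformed


def missing_fields(doc: DatasetDoc) -> list[str]:
    """Return the names of documentation fields that are empty or whitespace."""
    return [key for key, value in asdict(doc).items() if not value.strip()]


# Hypothetical dataset entry with the processing step not yet documented.
doc = DatasetDoc(
    name="loan-applications-2023",
    source="Internal CRM export",
    characteristics="120k rows, 2019 to 2023",
    processing="",
)

print(missing_fields(doc))
```

A check like this can run before a dataset is approved for training, so that gaps in the documentation are caught early.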

3. Transparency & Explainability

- Clear Documentation: Provide detailed information about the AI system's purpose, functioning, and decision-making processes. To give all stakeholders a common understanding of the AI system, provide them with a SIPOC (Suppliers, Inputs, Process, Outputs, Customers) process map.

- User Information: Inform users when they are interacting with an AI system and provide explanations for decisions made by AI systems, particularly in high-risk applications.

4. Human Oversight

- Human-in-the-Loop: Implement processes that allow human intervention in AI decision-making processes, especially for high-risk applications.

- Training and Awareness: Train personnel on how to oversee and interact with AI systems effectively, ensuring they understand potential risks and ethical considerations.
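
One possible way to implement a human-in-the-loop step is to route decisions to a reviewer whenever the system is high-risk or the model is not confident enough. This is only a sketch: `Decision`, `route` and the `min_confidence` default of 0.9 are assumptions chosen for illustration, not values prescribed by the EU AI Act.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    subject_id: str
    outcome: str
    confidence: float  # model confidence between 0.0 and 1.0
    high_risk: bool    # whether the originating system is classified high-risk


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Send high-risk or low-confidence decisions to a human reviewer."""
    if decision.high_risk or decision.confidence < min_confidence:
        return "human_review"
    return "auto_apply"


print(route(Decision("app-001", "reject", 0.97, high_risk=True)))
print(route(Decision("msg-042", "spam", 0.95, high_risk=False)))
print(route(Decision("msg-043", "spam", 0.60, high_risk=False)))
```

The routing rule is deliberately conservative: for high-risk systems a confident model output still goes to a person.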

5. Accountability & Liability

- Assign Responsibility: Designate individuals or teams responsible for ensuring compliance with the EU AI Act.

- Internal Audits and Reviews: Regularly audit AI systems and processes to ensure compliance and address any identified issues.

- Incident Reporting: Establish mechanisms for reporting and addressing incidents or failures of AI systems.

6. Ethical Considerations & Fundamental Rights

- Ethical AI Guidelines: Develop and adhere to ethical guidelines that align with the EU AI Act's principles.

- Impact Assessments: Conduct assessments to evaluate the impact of AI systems on fundamental rights and take measures to mitigate any negative impacts.

7. Compliance Documentation & Reporting

- Maintain Records: Keep detailed records of compliance efforts, including risk assessments, data management practices and transparency measures.

- Regulatory Reporting: Provide necessary reports and documentation to regulatory authorities as required by the EU AI Act.

8. Continuous Monitoring & Improvement

- Real-Time Monitoring: Implement monitoring systems to continuously evaluate the performance and behavior of AI systems.

- Feedback Loops: Establish feedback mechanisms to learn from user experiences and system performance, allowing for ongoing improvements.
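
Continuous monitoring could, for example, track the error rate over a sliding window of recent outcomes and raise an alert when it crosses a threshold. The class `ErrorRateMonitor`, the window size and the threshold are hypothetical; real deployments would pick metrics and limits that fit the system being monitored.

```python
from collections import deque


class ErrorRateMonitor:
    """Track correctness of the most recent predictions in a fixed-size window."""

    def __init__(self, window: int = 100, threshold: float = 0.1) -> None:
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def record(self, correct: bool) -> None:
        # Oldest outcomes drop out automatically once the window is full.
        self.outcomes.append(correct)

    def error_rate(self) -> float:
        if not self.outcomes:
            return 0.0
        return self.outcomes.count(False) / len(self.outcomes)

    def alert(self) -> bool:
        return self.error_rate() > self.threshold


monitor = ErrorRateMonitor(window=4, threshold=0.25)
for correct in [True, True, False, False]:
    monitor.record(correct)

print(monitor.error_rate(), monitor.alert())
```

User feedback (for example, a corrected decision) can be fed in through `record`, which ties the feedback loop to the same monitor.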

9. Stakeholder Engagement

- User Engagement: Involve users and other stakeholders in the design and deployment process to ensure AI systems meet their needs and expectations.

- Public Consultation: Engage with the public and civil society organizations to gather input and address concerns related to AI system deployment.

You can open this sandbox process model in the ProcessHorizon web app via the following link, customize it to your needs, and export or print the automatically generated visual process map as a PDF document or share it with your peers: https://app.processhorizon.com/enterprises/ac7fEtY926vKKu45pPdZoZ8v/frontend